How to Build a Real-Time Wildlife Camera Trap with Jetson Nano and YOLOv5

Prerequisites

Before starting this project, you should have experience with the Linux command line, Python programming, and basic computer vision concepts. You should also be comfortable flashing SD cards, wiring GPIO pins, and configuring networks.

Your development environment should include a computer capable of running the Jetson Nano setup tools, and you'll need a reliable internet connection for downloading dependencies and models. Familiarity with PyTorch and basic machine learning workflows will be beneficial, though not strictly required.

You also need access to an outdoor location where you can safely deploy the camera trap for testing. An understanding of wildlife photography ethics and local regulations on camera placement is essential for responsible deployment.

Parts & Components

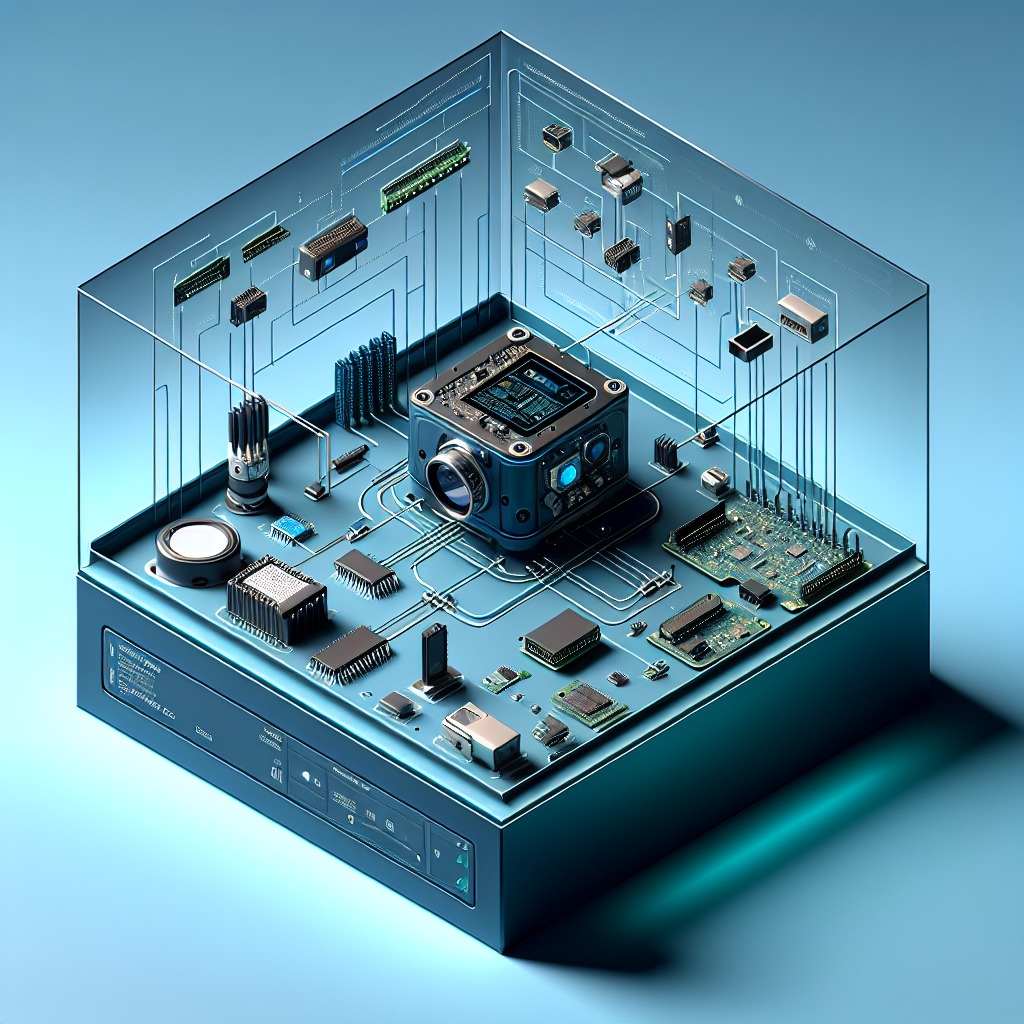

The hardware components required for this project include:

- NVIDIA Jetson Nano Developer Kit - The main computing unit

- SanDisk 64GB microSD card - For the operating system and storage

- Logitech C920 USB webcam - Or any USB camera compatible with V4L2

- 5V 4A power supply - Barrel jack connector for Jetson Nano

- Weatherproof enclosure - IP65 rated or higher

- Passive infrared (PIR) motion sensor - HC-SR501 recommended

- Jumper wires and breadboard - For PIR sensor connections

- 32GB USB flash drive - For additional storage and image backup

- Portable power bank - 20,000mAh capacity minimum for field deployment
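As a rough sizing check for the power bank, runtime can be estimated from its rated capacity. The sketch below is illustrative only: the 3.7 V nominal cell voltage, 85% conversion efficiency, and 10 W average load are assumptions rather than measurements, and estimate_runtime_hours is a hypothetical helper name.

```python
def estimate_runtime_hours(capacity_mah, cell_voltage=3.7, efficiency=0.85, load_watts=10.0):
    """Estimate hours of runtime for a power bank feeding a constant load.

    All defaults are assumed values, not measurements of any specific hardware.
    """
    energy_wh = capacity_mah / 1000.0 * cell_voltage  # capacity is rated at cell voltage
    usable_wh = energy_wh * efficiency                # allow for step-up converter losses
    return usable_wh / load_watts


print(f"{estimate_runtime_hours(20000):.1f} hours")
```

Under these assumed values, a 20,000mAh bank lasts roughly six hours. Measure the actual draw of your setup before planning a deployment around a number like this.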

Software requirements include:

- JetPack SDK 4.6 - NVIDIA's comprehensive SDK

- Python 3.6+ - Comes pre-installed with JetPack

- PyTorch 1.10+ - With CUDA support for Jetson

- OpenCV 4.5+ - For image processing and camera interface

- YOLOv5 - Ultralytics implementation

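- Additional Python packages - NumPy, Pillow, and Jetson.GPIO (NVIDIA's GPIO library, which exposes an RPi.GPIO-compatible API so that import RPi.GPIO works in the scripts below)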

Step-by-Step Guide

1. Set Up the Jetson Nano

Flash the JetPack image to your microSD card using the NVIDIA SDK Manager or Balena Etcher. Insert the card into the Jetson Nano and complete the initial setup process. Then enable the maximum power mode and lock the clocks at their highest frequencies for optimal performance:

sudo nvpmodel -m 0
sudo jetson_clocks

Update the system and install essential dependencies:

sudo apt update && sudo apt upgrade -y
sudo apt install -y python3-pip git cmake build-essential
sudo apt install -y libopencv-dev python3-opencv
sudo apt install -y v4l-utils

2. Install PyTorch and YOLOv5

Install the appropriate PyTorch version for Jetson Nano with CUDA support:

wget https://nvidia.box.com/shared/static/fjtbno0vpo676a25cgvuqc1wty0fkkg6.whl -O torch-1.10.0-cp36-cp36m-linux_aarch64.whl
pip3 install torch-1.10.0-cp36-cp36m-linux_aarch64.whl
pip3 install torchvision

Clone and set up the YOLOv5 repository:

git clone https://github.com/ultralytics/yolov5.git
cd yolov5
pip3 install -r requirements.txt

3. Configure the Camera and PIR Sensor

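Connect the PIR sensor to the Jetson Nano's 40-pin header: sensor VCC to a 5V pin, GND to a ground pin, and the sensor output to GPIO 18. The scripts in this guide use BCM numbering, in which GPIO 18 corresponds to physical pin 12.

Test the camera connection: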

v4l2-ctl --list-devices
v4l2-ctl --device=/dev/video0 --all

Create a test script to verify camera functionality:

import cv2

# Open the first V4L2 camera and request 1080p frames
cap = cv2.VideoCapture(0)
cap.set(cv2.CAP_PROP_FRAME_WIDTH, 1920)
cap.set(cv2.CAP_PROP_FRAME_HEIGHT, 1080)

# Grab a single frame and write it to disk
ret, frame = cap.read()
if ret:
    cv2.imwrite('test_image.jpg', frame)
    print("Camera test successful")
else:
    print("Camera test failed")

cap.release()

4. Create the Main Wildlife Detection Script

Develop the core application that integrates motion detection, camera capture, and YOLOv5 inference:

#!/usr/bin/env python3
import cv2
import torch
import numpy as np
import time
import os
import json
from datetime import datetime
from pathlib import Path
import RPi.GPIO as GPIO  # On Jetson, this import is served by the Jetson.GPIO package


class WildlifeCameraTrap:
    def __init__(self, config_file='config.json'):
        self.load_config(config_file)
        self.setup_gpio()
        self.setup_camera()
        self.setup_model()
        self.setup_directories()

    def load_config(self, config_file):
        """Load configuration from JSON file"""
        default_config = {
            "pir_pin": 18,
            "camera_index": 0,
            "image_width": 1920,
            "image_height": 1080,
            "model_path": "yolov5s.pt",
            "confidence_threshold": 0.4,
            "target_classes": ["bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe"],
            "save_all_detections": True,
            "max_daily_images": 1000
        }
        # Values from the user's file override the defaults key by key
        if os.path.exists(config_file):
            with open(config_file, 'r') as f:
                user_config = json.load(f)
            default_config.update(user_config)
        self.config = default_config

    def setup_gpio(self):
        """Initialize GPIO for PIR sensor"""
        GPIO.setmode(GPIO.BCM)
        GPIO.setup(self.config['pir_pin'], GPIO.IN)

    def setup_camera(self):
        """Initialize camera with optimal settings"""
        self.cap = cv2.VideoCapture(self.config['camera_index'])
        self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, self.config['image_width'])
        self.cap.set(cv2.CAP_PROP_FRAME_HEIGHT, self.config['image_height'])
        self.cap.set(cv2.CAP_PROP_AUTOFOCUS, 1)
        # Warm up camera
        for _ in range(10):
            self.cap.read()

    def setup_model(self):
        """Load YOLOv5 model"""
        # Note: this loads the pretrained yolov5s weights through torch.hub;
        # the model_path config entry is not consulted here.
        self.model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)
        self.model.conf = self.config['confidence_threshold']
        # Use GPU if available
        if torch.cuda.is_available():
            self.model.cuda()

    def setup_directories(self):
        """Create necessary directories"""
        self.base_dir = Path("wildlife_captures")
        self.images_dir = self.base_dir / "images"
        self.logs_dir = self.base_dir / "logs"
        self.images_dir.mkdir(parents=True, exist_ok=True)
        self.logs_dir.mkdir(parents=True, exist_ok=True)

    def detect_motion(self, timeout=30):
        """Wait for motion detection with timeout"""
        start_time = time.time()
        while time.time() - start_time < timeout:
            if GPIO.input(self.config['pir_pin']):
                return True
            time.sleep(0.1)
        return False

    def capture_and_analyze(self):
        """Capture image and run wildlife detection"""
        ret, frame = self.cap.read()
        if not ret:
            return None, []

        # Run YOLOv5 inference. OpenCV returns BGR frames, while the YOLOv5
        # hub model expects RGB for NumPy input, so reverse the channel order.
        results = self.model(frame[..., ::-1])
        detections = results.pandas().xyxy[0]

        # Filter for target wildlife classes
        wildlife_detections = detections[
            detections['name'].isin(self.config['target_classes'])
        ]
        return frame, wildlife_detections

    def save_detection(self, frame, detections, motion_triggered=True):
        """Save image and detection metadata"""
        timestamp = datetime.now()
        date_str = timestamp.strftime("%Y-%m-%d")
        time_str = timestamp.strftime("%H-%M-%S")

        # Create daily directory
        daily_dir = self.images_dir / date_str
        daily_dir.mkdir(exist_ok=True)

        # Check daily limit
        existing_images = len(list(daily_dir.glob("*.jpg")))
        if existing_images >= self.config['max_daily_images']:
            return False

        # Generate filename
        trigger_type = "motion" if motion_triggered else "scheduled"
        filename = f"{time_str}_{trigger_type}.jpg"
        image_path = daily_dir / filename

        # Draw bounding boxes on image
        annotated_frame = frame.copy()
        for _, detection in detections.iterrows():
            x1, y1, x2, y2 = int(detection['xmin']), int(detection['ymin']), int(detection['xmax']), int(detection['ymax'])
            label = f"{detection['name']} {detection['confidence']:.2f}"
            cv2.rectangle(annotated_frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
            cv2.putText(annotated_frame, label, (x1, y1-10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)

        # Save annotated image
        cv2.imwrite(str(image_path), annotated_frame)

        # Save metadata
        metadata = {
            "timestamp": timestamp.isoformat(),
            "trigger_type": trigger_type,
            "detections": [
                {
                    "class": row['name'],
                    "confidence": float(row['confidence']),
                    "bbox": [int(row['xmin']), int(row['ymin']), int(row['xmax']), int(row['ymax'])]
                }
                for _, row in detections.iterrows()
            ]
        }
        metadata_path = daily_dir / f"{time_str}_{trigger_type}.json"
        with open(metadata_path, 'w') as f:
            json.dump(metadata, f, indent=2)
        return True

    def log_event(self, message):
        """Log events with timestamp"""
        timestamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        log_message = f"[{timestamp}] {message}\n"
        log_file = self.logs_dir / f"{datetime.now().strftime('%Y-%m-%d')}.log"
        with open(log_file, 'a') as f:
            f.write(log_message)
        print(log_message.strip())

    def run(self):
        """Main execution loop"""
        self.log_event("Wildlife camera trap started")
        try:
            while True:
                # Wait for motion detection
                if self.detect_motion(timeout=60):
                    self.log_event("Motion detected, capturing image")
                    # Brief delay to let subject move into frame
                    time.sleep(2)
                    frame, detections = self.capture_and_analyze()
                    if frame is not None:
                        if len(detections) > 0:
                            wildlife_names = detections['name'].tolist()
                            self.log_event(f"Wildlife detected: {', '.join(wildlife_names)}")
                            self.save_detection(frame, detections, motion_triggered=True)
                        elif self.config['save_all_detections']:
                            self.log_event("Motion detected but no wildlife identified")
                            empty_detections = detections.iloc[0:0]  # Empty dataframe with same structure
                            self.save_detection(frame, empty_detections, motion_triggered=True)
                    # Cool-down period
                    time.sleep(10)
                else:
                    # Periodic capture even without motion (optional)
                    time.sleep(1)
        except KeyboardInterrupt:
            self.log_event("Camera trap stopped by user")
        except Exception as e:
            self.log_event(f"Error: {str(e)}")
        finally:
            self.cleanup()

    def cleanup(self):
        """Clean up resources"""
        if hasattr(self, 'cap'):
            self.cap.release()
        GPIO.cleanup()
        self.log_event("Resources cleaned up")

if __name__ == "__main__":
    trap = WildlifeCameraTrap()
    trap.run()

5. Create Configuration File

Create a configuration file named config.json in the directory the script runs from to customize the camera trap behavior. Any key you set here overrides the corresponding default in load_config:

{
  "pir_pin": 18,
  "camera_index": 0,
  "image_width": 1920,
  "image_height": 1080,
  "model_path": "yolov5s.pt",
  "confidence_threshold": 0.3,
  "target_classes": [
    "bird", "cat", "dog", "horse", "sheep", "cow",
    "elephant", "bear", "zebra", "giraffe", "person"
  ],
  "save_all_detections": false,
  "max_daily_images": 500
}

6. Set Up Automatic Startup

Create a systemd service for automatic startup on boot:

sudo nano /etc/systemd/system/wildlife-trap.service

Add the following unit definition, replacing your_username with your account name:

[Unit]
Description=Wildlife Camera Trap
After=network.target

[Service]
Type=simple
User=your_username
WorkingDirectory=/home/your_username/wildlife_trap
ExecStart=/usr/bin/python3 /home/your_username/wildlife_trap/wildlife_trap.py
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target

Enable and start the service:

sudo systemctl enable wildlife-trap.service
sudo systemctl start wildlife-trap.service

7. Implement Remote Monitoring

Add a simple web interface for remote monitoring:

from flask import Flask, render_template, jsonify, send_from_directory
from pathlib import Path
import json

app = Flask(__name__)

# Resolve once so image lookups do not depend on Flask's root path
IMAGES_DIR = Path("wildlife_captures/images").resolve()


@app.route('/')
def dashboard():
    # Expects templates/dashboard.html, which this guide does not provide
    return render_template('dashboard.html')


@app.route('/api/recent_captures')
def recent_captures():
    recent_images = []
    # Newest seven days, up to ten images per day
    for date_dir in sorted(IMAGES_DIR.glob("*"), reverse=True)[:7]:
        for img_file in sorted(date_dir.glob("*.jpg"), reverse=True)[:10]:
            metadata_file = img_file.with_suffix('.json')
            metadata = {}
            if metadata_file.exists():
                with open(metadata_file, 'r') as f:
                    metadata = json.load(f)
            recent_images.append({
                'filename': str(img_file.relative_to(IMAGES_DIR)),
                'timestamp': metadata.get('timestamp', ''),
                'detections': len(metadata.get('detections', []))
            })
    return jsonify(recent_images[:20])


@app.route('/api/image/<path:filename>')
def serve_image(filename):
    # send_from_directory rejects paths that escape IMAGES_DIR
    return send_from_directory(str(IMAGES_DIR), filename)


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=8080)

Troubleshooting

Camera not detected or producing poor quality images: Verify the USB connection and power supply capacity. The Jetson Nano requires substantial power, especially when running inference. Use dmesg | grep usb to check for USB enumeration issues. Ensure your camera is V4L2 compatible and try different USB ports.

YOLOv5 model fails to load or runs slowly: This typically indicates insufficient memory or missing CUDA drivers. Check available memory with free -h and GPU status with tegrastats. Consider using a smaller model like YOLOv5n for better performance on edge devices. Ensure you're using the ARM64-compatible PyTorch wheel.
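To judge whether a smaller model actually helps, it is useful to measure inference time rather than guess. The following is a minimal timing sketch; average_latency is a hypothetical helper, and the lambda is a stand-in workload so the example runs without a model or camera.

```python
import time


def average_latency(fn, arg, warmup=2, runs=10):
    """Return the mean wall-clock seconds per call of fn(arg)."""
    for _ in range(warmup):
        fn(arg)  # warm-up calls are excluded from the timing
    start = time.perf_counter()
    for _ in range(runs):
        fn(arg)
    return (time.perf_counter() - start) / runs


# Stand-in workload; on the device, pass the loaded model and a captured frame.
latency = average_latency(lambda n: sum(range(n)), 10000)
print(f"{latency * 1000:.3f} ms per call")
```

On the Jetson, the same helper could be called with the loaded YOLOv5 model and a frame from the camera. GPU work can complete asynchronously, so treat timings taken this way as approximate.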

PIR sensor triggering constantly or not at all: PIR sensors require calibration and proper power supply. Check the sensitivity and delay adjustments on the sensor board. Ensure stable 5V power and proper grounding. Environmental factors like temperature changes and moving vegetation can cause false triggers.
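Short electrical glitches on the signal line can also be filtered in software by requiring several consecutive high readings before treating the input as motion. The sketch below assumes a hypothetical helper, wait_for_stable_motion, that takes any zero-argument function returning the pin state; the sample count and interval are illustrative values.

```python
import time


def wait_for_stable_motion(read_pin, required_hits=3, interval=0.1, timeout=30.0,
                           sleep=time.sleep, clock=time.monotonic):
    """Return True once read_pin() is truthy for required_hits consecutive samples.

    Returns False if that does not happen before the timeout expires.
    """
    deadline = clock() + timeout
    hits = 0
    while clock() < deadline:
        if read_pin():
            hits += 1
            if hits >= required_hits:
                return True
        else:
            hits = 0  # a low reading resets the streak
        sleep(interval)
    return False


# Simulated pin readings: one isolated spike, then a sustained signal.
readings = iter([1, 0, 1, 1, 1])
print(wait_for_stable_motion(lambda: next(readings), sleep=lambda s: None))
```

In the main script, this could stand in for the polling loop in detect_motion, with read_pin set to a lambda that calls GPIO.input on the configured pin. It only removes brief spikes; triggers that the sensor itself holds high, such as those caused by moving vegetation, still need the hardware adjustments described above.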

High false positive rates: Adjust the confidence threshold in the configuration file. Consider training a custom model on your specific wildlife dataset for better accuracy. Environmental factors like shadows, leaves, and weather conditions can trigger false detections.
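Before changing the threshold, it can help to see how many saved detections would survive at a few candidate values. This sketch works on records shaped like the metadata JSON files written by save_detection; count_surviving is a hypothetical helper and the sample records are made up for illustration.

```python
def count_surviving(records, thresholds):
    """Map each threshold to the number of detections at or above it."""
    confidences = [
        det["confidence"]
        for record in records
        for det in record.get("detections", [])
    ]
    return {t: sum(c >= t for c in confidences) for t in thresholds}


# Made-up sample records in the same shape as the saved metadata.
sample = [
    {"detections": [{"class": "bird", "confidence": 0.82},
                    {"class": "cat", "confidence": 0.35}]},
    {"detections": [{"class": "dog", "confidence": 0.51}]},
    {"detections": []},
]
print(count_surviving(sample, [0.3, 0.4, 0.6]))  # {0.3: 3, 0.4: 2, 0.6: 1}
```

Because the saved metadata only contains detections that passed the threshold in force at capture time, this can show the effect of raising the threshold but not of lowering it.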

Storage filling up quickly: Implement automatic cleanup routines for old images. Consider using lower resolution for non-wildlife detections or implementing compression. Monitor disk usage with df -h and consider using external storage for long-term deployment.
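One possible cleanup routine relies on the fact that the capture script stores images in one directory per day, named YYYY-MM-DD. The sketch below removes daily directories older than a retention window; remove_old_captures and the 14-day default are assumptions for illustration, not part of the script above.

```python
import shutil
from datetime import date, datetime, timedelta
from pathlib import Path


def remove_old_captures(images_dir, keep_days=14, today=None):
    """Delete daily capture directories older than keep_days; return their names."""
    today = today or date.today()
    cutoff = today - timedelta(days=keep_days)
    removed = []
    for day_dir in sorted(Path(images_dir).iterdir()):
        if not day_dir.is_dir():
            continue
        try:
            day = datetime.strptime(day_dir.name, "%Y-%m-%d").date()
        except ValueError:
            continue  # leave directories that are not named by date untouched
        if day < cutoff:
            shutil.rmtree(day_dir)  # removes images and metadata for that day
            removed.append(day_dir.name)
    return removed


# Example call (deletes data, so it is left commented out):
# remove_old_captures("wildlife_captures/images", keep_days=14)
```

A routine like this could run once a day from cron or at the start of the main loop. Copy anything you want to keep to external storage first, since the deletion is permanent.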

System crashes or freezes during operation: This often indicates power supply issues or overheating. Ensure adequate cooling and a stable power source. Monitor system temperature with tegrastats and consider adding a fan or heat sink. Check system logs with journalctl -f for error messages.
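Temperatures can also be logged from Python by reading the Linux thermal zones under /sys/class/thermal, which report values in thousandths of a degree Celsius. This is a sketch under assumptions: read_zone_temps and zones_over_limit are hypothetical helpers, the 80 degree limit is an arbitrary example, and which zones are meaningful on your board is something to check against tegrastats.

```python
from pathlib import Path


def read_zone_temps(base="/sys/class/thermal"):
    """Return {zone_type: degrees_celsius} for each readable thermal zone."""
    temps = {}
    for zone in sorted(Path(base).glob("thermal_zone*")):
        try:
            name = (zone / "type").read_text().strip()
            millidegrees = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            continue  # skip zones that cannot be read or parsed
        temps[name] = millidegrees / 1000.0
    return temps


def zones_over_limit(temps, limit_c=80.0):
    """Return the names of zones at or above limit_c (an assumed threshold)."""
    return sorted(name for name, value in temps.items() if value >= limit_c)


if __name__ == "__main__":
    current = read_zone_temps()
    print(current)
    print("Over limit:", zones_over_limit(current))
```

Writing these readings to the daily log alongside capture events makes it easier to tell whether a crash followed a temperature spike.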

Network connectivity issues for remote monitoring: Verify firewall settings and network configuration. Use netstat -tlnp to check if the Flask application is listening on the correct port. Consider using a VPN or port forwarding for remote access from outside your local network.
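From another machine on the network, a quick TCP connection attempt shows whether the dashboard port is reachable at all. The sketch below uses only the standard library; port_is_open is a hypothetical helper, and the address in the example is a placeholder for your Jetson's IP.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False  # refused, unreachable, or timed out


if __name__ == "__main__":
    # Placeholder address; substitute the Jetson's actual IP.
    print(port_is_open("192.168.1.50", 8080))
```

A False result while netstat shows the application listening on the Jetson points toward a firewall or routing problem rather than the Flask application itself.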

Conclusion

You've successfully built a sophisticated wildlife camera trap using the NVIDIA Jetson Nano and YOLOv5. This system combines motion detection, real-time AI inference, and automated data logging to create a powerful tool for wildlife monitoring and research.

The system you've created can operate autonomously in field conditions, capturing and analyzing wildlife activity with minimal human intervention. The modular design allows for easy customization and expansion based on specific research needs or deployment environments.

For next steps, consider implementing advanced features such as species-specific behavior analysis, integration with cloud storage services for automatic backup, or adding environmental sensors for comprehensive habitat monitoring. You might also explore training custom YOLOv5 models on local wildlife species for improved detection accuracy.

The remote monitoring capability enables researchers to check system status and recent captures without physically visiting the deployment site, making this solution ideal for studying sensitive wildlife habitats or conducting long-term monitoring projects.

Remember to follow local regulations and ethical guidelines when deploying camera traps in natural environments, and always prioritize animal welfare and habitat protection in your research activities.